LLM Study Notes

Documenting what I learned from a deep-dive video on LLMs.

Video

Gathering data

Goal: Gather clean, useful text data from arbitrary sources (websites, books, …).

Procedure: Collect as much text as possible and concatenate it into one enormous body of text.

Reference: Hugging Face’s FineWeb

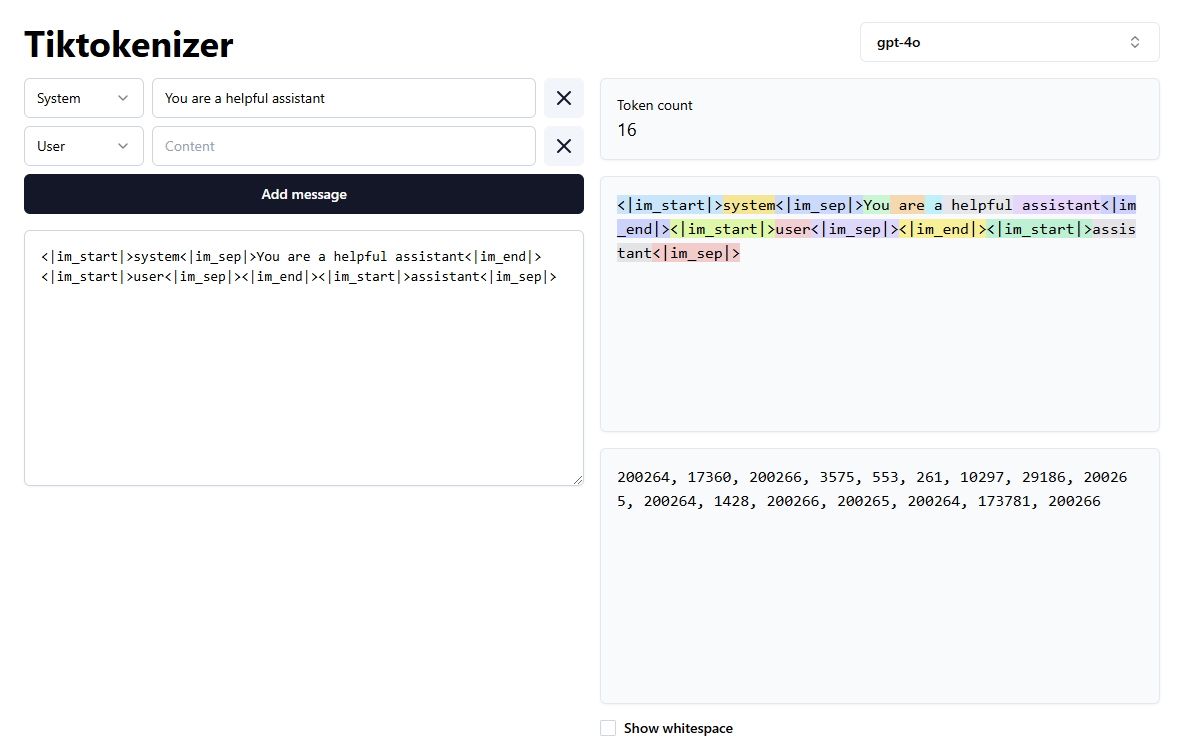
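The gathering step can be sketched as below. This is a minimal illustration under assumptions, not how FineWeb is actually built: the hypothetical `build_corpus` helper only normalizes whitespace, drops empty and exact-duplicate documents, and joins the rest with a separator string (`<|endoftext|>` is borrowed from the GPT-2 convention as an assumption). Real pipelines such as FineWeb apply much heavier filtering (language detection, quality filters, fuzzy deduplication).

```python
# Hypothetical separator marking document boundaries (GPT-2-style, an assumption).
SEPARATOR = "<|endoftext|>"


def build_corpus(documents: list[str]) -> str:
    """Clean raw documents and concatenate them into one large text.

    A toy sketch: real pipelines also filter by language, quality, etc.
    """
    seen: set[str] = set()
    cleaned: list[str] = []
    for doc in documents:
        # Collapse runs of whitespace (spaces, newlines, tabs) into single spaces.
        text = " ".join(doc.split())
        # Skip empty documents and exact duplicates.
        if not text or text in seen:
            continue
        seen.add(text)
        cleaned.append(text)
    return SEPARATOR.join(cleaned)


raw = ["Hello   world.", "", "Hello world.", "LLMs predict\nthe next token."]
corpus = build_corpus(raw)
print(corpus)
```

The separator keeps document boundaries visible after concatenation, so the model can learn where one text ends and an unrelated one begins.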

Tokenization

Goal: Convert the gathered text into a shorter sequence of symbols (tokens) while preserving all of its content.

Procedure: Using the Byte Pair Encoding (BPE) algorithm, repeatedly find the most frequent pair of adjacent symbols and encode it as a single new token.

E.g.:

Reference: BPE Algorithm, Tiktokenizer
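The core of BPE training can be sketched in a few lines. This minimal version works on the UTF-8 bytes of a string and greedily merges the most frequent adjacent pair into a new token ID (starting at 256, right after the 256 byte values), repeating for a fixed number of merges. The function names and the `num_merges` parameter are illustrative; production tokenizers typically also pre-split text with a regex and reserve special tokens.

```python
from __future__ import annotations

from collections import Counter


def most_frequent_pair(ids: list[int]) -> tuple[int, int] | None:
    """Return the most common adjacent pair of token IDs, or None if there is none."""
    pairs = Counter(zip(ids, ids[1:]))
    if not pairs:
        return None
    return pairs.most_common(1)[0][0]


def merge(ids: list[int], pair: tuple[int, int], new_id: int) -> list[int]:
    """Replace every non-overlapping occurrence of `pair` (left to right) with `new_id`."""
    out: list[int] = []
    i = 0
    while i < len(ids):
        if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out


def train_bpe(text: str, num_merges: int) -> tuple[list[int], dict[tuple[int, int], int]]:
    """Learn up to `num_merges` merges; return the final token IDs and the merge table."""
    ids = list(text.encode("utf-8"))  # start from raw bytes (IDs 0-255)
    merges: dict[tuple[int, int], int] = {}
    for k in range(num_merges):
        pair = most_frequent_pair(ids)
        if pair is None:
            break
        new_id = 256 + k  # new tokens get IDs after the 256 byte values
        ids = merge(ids, pair, new_id)
        merges[pair] = new_id
    return ids, merges


tokens, merges = train_bpe("aaabdaaabac", num_merges=3)
print(tokens, merges)
```

The 11-byte input shrinks to 5 tokens after three merges: the sequence gets shorter while the merge table lets the original bytes be recovered exactly.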

Training the language model

Goal: Capture the knowledge in the text by training a neural network on the tokenized text and tuning its parameters. The model trained at this stage is called the BASE model.

Procedure: Using machine learning, train the neural network so that it can statistically predict which token comes next after a sequence (window) of tokens.

E.g.:

Input text = “I view news” => token window = “333 255” => prediction = token “992” (“Article”) with probability 3%
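A real LLM learns these probabilities with a neural network trained over long token windows. As a much simpler stand-in (an assumption for illustration, not how the BASE model is trained), the sketch below "trains" a bigram model by counting which token follows which, then turns those counts into next-token probabilities. The token IDs echo the made-up numbers in the example above, and the training sequence is hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(tokens: list[int]) -> dict[int, Counter]:
    """Count, for every token, how often each other token follows it."""
    counts: dict[int, Counter] = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def next_token_probs(counts: dict[int, Counter], prev: int) -> dict[int, float]:
    """Turn follow-up counts into a probability distribution over the next token."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {tok: c / total for tok, c in followers.items()} if total else {}


# Hypothetical training sequence of token IDs.
training_tokens = [333, 255, 992, 333, 255, 992, 333, 255, 17, 333, 255, 992]
model = train_bigram(training_tokens)
print(next_token_probs(model, 255))
```

Here the "window" is just one token; a neural network replaces the count table so it can condition on thousands of previous tokens and generalize to windows it never saw.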

Inference

Goal: Generate a sequence of tokens from the trained model (i.e., generate an answer).

Procedure: Predict a next token, append it to the input, and feed the extended sequence back into the model to predict the following token, so that each new token is built on the ones generated before it.

E.g.:

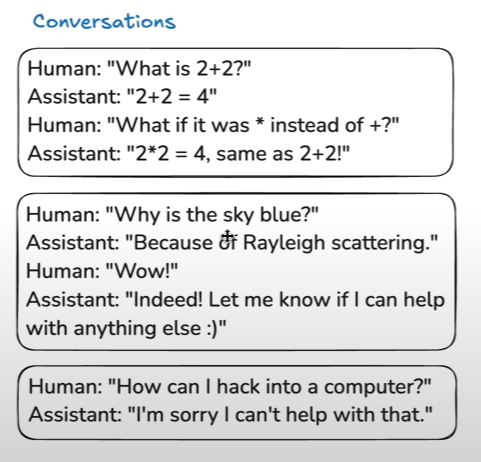
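The generation loop can be sketched as follows. The `TOY_MODEL` table is hypothetical and only looks at the last token; a real LLM conditions on the whole context window. What the sketch does show is the autoregressive pattern: sample a token from the predicted distribution, append it, and feed the longer sequence back in. The `<end>` stop token is also an assumption.

```python
import random

# Hypothetical toy "model": maps the last token to a next-token distribution.
# A real LLM conditions on the whole context window, not just the last token.
TOY_MODEL: dict[str, dict[str, float]] = {
    "I": {"view": 0.7, "read": 0.3},
    "view": {"news": 0.9, "art": 0.1},
    "read": {"news": 1.0},
    "news": {"articles": 0.6, "<end>": 0.4},
    "art": {"<end>": 1.0},
    "articles": {"<end>": 1.0},
}


def generate(prompt: list[str], max_new_tokens: int, rng: random.Random) -> list[str]:
    """Autoregressively sample tokens, feeding each one back in as input."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        dist = TOY_MODEL.get(tokens[-1])
        if not dist:
            break  # the toy model has no prediction for this token
        choices, weights = zip(*dist.items())
        nxt = rng.choices(choices, weights=weights, k=1)[0]
        if nxt == "<end>":
            break  # stop token: the answer is complete
        tokens.append(nxt)  # the new token becomes part of the next input
    return tokens


print(generate(["I"], max_new_tokens=10, rng=random.Random(0)))
```

Sampling (rather than always taking the most likely token) is why the same prompt can produce different answers; passing a seeded `random.Random` makes a run reproducible.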

Post-training tuning

Goal: Turn the BASE model into a more helpful assistant.

Key takeaway

An LLM works by PREDICTING the most likely next token to appear in the answer.

When a user chats with an LLM, the model runs a simulation based on its training data and the user’s input.

As described below, the model is refined using data labelled by human experts.

It predicts what a human expert would most likely say when presented with the user’s input.

Hence, the model is not CRAFTING its own answer.

There are multiple methods:

- Embed some starting tokens when the model starts up so that it is more likely to produce related information.

E.g.:

Feed the model information about itself so that it has an answer when asked about its identity.

- Use conversations to further tune the model’s answers (i.e., let the model take on a personality). The conversations can be either synthetic (machine-generated) or crafted by human experts.

E.g.:

Video’s timestamp on this matter

- Use special tokens to allow the model to call external tools, such as search…
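The post-training methods above all rely on turning a conversation into one token stream that the model can continue. A minimal sketch, assuming ChatML-style markers (`<|im_start|>`, `<|im_end|>`); these are one real convention, but each model family defines its own special tokens. The `Message` class, the `render_chat` helper, and the "ExampleBot" identity are hypothetical. The system message plays the role of the starting tokens that tell the model about itself, and the trailing open assistant turn is where the model begins predicting.

```python
from dataclasses import dataclass

# ChatML-style special tokens (an assumption; each model family defines its own).
START, END = "<|im_start|>", "<|im_end|>"


@dataclass
class Message:
    role: str  # "system", "user", or "assistant"
    content: str


def render_chat(messages: list[Message], add_generation_prompt: bool = True) -> str:
    """Flatten a conversation into a single string delimited by special tokens."""
    parts = [f"{START}{m.role}\n{m.content}{END}\n" for m in messages]
    if add_generation_prompt:
        # Leave an open assistant turn: the model predicts tokens from here on.
        parts.append(f"{START}assistant\n")
    return "".join(parts)


conversation = [
    # Starting tokens that tell the model about itself (hypothetical identity).
    Message("system", "You are ExampleBot, a helpful assistant."),
    Message("user", "Who are you?"),
]
print(render_chat(conversation))
```

During fine-tuning, expert-written or synthetic conversations are rendered the same way, so the model learns to continue the open assistant turn in the style of those answers.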

Video’s Note

All credit goes to the owner of the YouTube video (YouTube Link).
